Key Takeaways

As businesses adopt AI, managing errors and exceptions is a critical aspect of governance.

- The strategy: A robust strategy has several layers: prevention (high-quality training data), detection (the system's ability to recognize when its confidence is low), and resolution.

- The business impact: A good error management strategy builds resilient and trustworthy AI systems.

- The key to resolution: A “human in the loop” mechanism, where the AI can pause, flag an error, and ask a human expert for guidance, is essential.

Artificial Intelligence (AI) stands as a beacon of innovation today, yet deploying it is not without complexity. Entrusting AI with critical business functions holds immense promise, but it also raises a fundamental concern: how do we address Agentic Intelligence Errors? Leaders around the world increasingly recognize that understanding how AI systems learn from and mitigate their missteps is essential for accuracy and reliability in enterprise automation.

This article explains how AI systems handle and learn from their imperfections, focusing on the challenges of accuracy and trustworthiness in demanding enterprise automation. It defines common types of AI mistakes (e.g., misinterpretation, outright fabrication), examines their root causes (such as data limitations or inherent biases), and describes their cascading effects on user experience and operational efficiency. It then outlines remediation techniques and best practices for improving AI accuracy and preventing future errors, with the aim of helping teams build more dependable and adaptive AI systems.

Can AI Make Mistakes?

The notion that AI, despite its remarkable computational power, is infallible represents a dangerous misconception. Indeed, AI can and does make mistakes. An AI mistake isn’t a sign of fundamental failure but rather an inherent challenge in systems that learn from vast, often imperfect, real-world data and operate in unpredictable environments. These Agentic Intelligence Errors are critical focal points for responsible AI development.

The very nature of learning from data means AI will inevitably encounter situations it hasn’t perfectly generalized to, or where the data itself is flawed. Understanding this reality is the first step toward effective management and mitigation of these artificial intelligence mistakes.

Common Manifestations of AI Mistakes

Agentic Intelligence Errors can present in various forms, each with distinct implications for enterprise operations.

- Misinterpretation of Intent: An AI mistake might occur when a system fails to accurately grasp a user’s query or the context of a request. For instance, a chatbot might misinterpret a customer’s nuanced complaint, leading to irrelevant responses and frustration.

- Data Extraction Errors: AI designed to process documents might misread fields, especially in unstructured or poorly formatted documents, leading to incorrect data entry in financial systems.

- Algorithmic Bias: This insidious AI mistake arises when an AI system's output systematically favors or disadvantages certain groups, often because of biases in its training data. For example, a lending AI trained on historical data that reflects discriminatory practices might inadvertently discriminate against the same groups.

- Context Handling Failures: An AI mistake can emerge when a system fails to maintain context across multiple interactions or over time, leading to disjointed or illogical responses.

- “Hallucinations” (Generative AI Failures): A particularly notable AI mistake with Generative AI models is hallucination, where the AI confidently generates factually incorrect, nonsensical, or entirely fabricated information. For instance, a Generative AI might create a plausible-sounding legal reference that does not exist. These Generative AI failures pose significant risks in factual domains.

- Outdated Information: If an AI's knowledge base isn't continually updated, it may give answers based on outdated facts or regulations, which is especially problematic in fast-evolving industries like finance.
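Some of these mistakes, data extraction errors in particular, can be caught before they reach downstream systems by running simple consistency checks on the extracted output. The sketch below is illustrative only: the `ExtractedInvoice` record, its field names, and the `validate_invoice` function are hypothetical and not part of any specific product. The idea is that a record failing any check is routed to a person rather than posted automatically.

```python
from dataclasses import dataclass, field
from datetime import date
from decimal import Decimal


@dataclass
class ExtractedInvoice:
    """Hypothetical invoice record as returned by a document-extraction model."""
    invoice_id: str
    invoice_date: str  # raw extracted text, expected in ISO format (YYYY-MM-DD)
    total: Decimal
    line_items: list = field(default_factory=list)  # list of Decimal amounts


def validate_invoice(inv: ExtractedInvoice) -> list:
    """Return a list of problems found; an empty list means every check passed."""
    problems = []
    if not inv.invoice_id.strip():
        problems.append("missing invoice_id")
    try:
        parsed = date.fromisoformat(inv.invoice_date)
        if parsed > date.today():
            problems.append(f"invoice_date {parsed} is in the future")
    except ValueError:
        problems.append(f"unparseable invoice_date {inv.invoice_date!r}")
    # Cross-field check: extracted line items should add up to the extracted total.
    items_sum = sum(inv.line_items, Decimal("0"))
    if items_sum != inv.total:
        problems.append(f"line items sum to {items_sum} but total is {inv.total}")
    return problems


# Example: the second record has a misread date format and a misread line item.
ok = ExtractedInvoice("INV-001", "2024-03-15", Decimal("150.00"),
                      [Decimal("100.00"), Decimal("50.00")])
bad = ExtractedInvoice("INV-002", "15/03/2024", Decimal("150.00"),
                       [Decimal("100.00"), Decimal("5.00")])
print(validate_invoice(ok))   # []
print(validate_invoice(bad))
# ["unparseable invoice_date '15/03/2024'", 'line items sum to 105.00 but total is 150.00']
```

Checks like these cannot tell which value is wrong, only that the record is internally inconsistent, which is exactly the signal needed to pause and ask a human.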

These varied Agentic Intelligence Errors underscore the necessity for robust error management strategies.

Why Do AI Mistakes Occur? Unpacking the Root Causes

Understanding the genesis of an AI mistake is paramount for effective remediation. These inaccuracies rarely stem from malicious intent but rather from inherent complexities in AI design and deployment.

- Training Data Limitations:

  - Bias in Data (AI bias): If the data used to train the AI reflects societal prejudices or contains imbalances (e.g., overrepresentation of one demographic), the AI will learn and perpetuate these biases. This is a pervasive cause of artificial intelligence mistakes.

  - Insufficient Data: Lack of enough diverse data for specific scenarios means the AI may not generalize well or make accurate predictions outside its limited training set.

  - Noisy or Inaccurate Data: Errors, inconsistencies, or irrelevant information within the training data directly lead to the AI learning incorrect patterns, resulting in an AI mistake.

- Algorithmic and Model Design Flaws:

  - Overfitting/Underfitting: The AI model might be too complex (overfitting, memorizing noise) or too simple (underfitting, failing to capture patterns), leading to poor performance on new data.

  - Lack of Interpretability (“Black Box”): Many advanced models offer limited transparency, making it difficult for humans to understand their decision-making process. This opacity hinders the ability to diagnose and fix Agentic Intelligence Errors.

- Technological and Environmental Constraints:

  - Real-world Variability: AI systems often struggle when deployed in complex, unpredictable real-world environments that differ significantly from their controlled training conditions.

  - Compute Limitations: Resource constraints can limit the scale or quality of AI models, leading to compromises in accuracy.

  - Human-AI Interaction Issues: Miscommunication or incorrect user prompts can lead to an AI mistake, even if the AI itself is functioning correctly.
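Of these causes, overfitting and underfitting are among the easiest to check for: compare the model's error on its own training data with its error on held-out validation data it never saw during training. The sketch below encodes that rule of thumb. The function name and the default thresholds (`max_train_error=0.10`, `max_gap=0.05`) are illustrative assumptions; sensible values depend on the task and the error metric.

```python
def diagnose_fit(train_error: float, val_error: float,
                 max_train_error: float = 0.10, max_gap: float = 0.05) -> str:
    """Rough fit diagnosis from error rates in [0, 1]; thresholds are illustrative."""
    if train_error > max_train_error:
        # The model cannot fit even the data it learned from: likely too simple.
        return "underfitting"
    if val_error - train_error > max_gap:
        # Much worse on unseen data than on training data: likely memorized noise.
        return "overfitting"
    return "acceptable"


print(diagnose_fit(0.02, 0.20))  # overfitting
print(diagnose_fit(0.25, 0.27))  # underfitting
print(diagnose_fit(0.05, 0.07))  # acceptable
```

The check is only as good as the validation set: if it does not resemble the data the system will meet in production, a passing result can still hide the real-world variability problem described above.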

These multifaceted challenges highlight that building reliable AI is an ongoing process of refinement and robust management.

The Impact of Agentic Intelligence Errors

The consequences of an AI mistake can extend far beyond a mere operational glitch, impacting critical aspects of an enterprise.

- User Frustration and Dissatisfaction: An AI mistake in customer-facing applications (e.g., chatbots providing incorrect info) can lead to significant user frustration, eroding trust and damaging brand reputation.

- Operational Inefficiencies: Errors in automated processes (e.g., incorrect financial transactions, misrouted documents) require costly manual intervention, rework, and delays, negating the very purpose of automation.

- Financial Losses: Agentic Intelligence Errors can directly lead to monetary losses through incorrect payments, missed opportunities, or undetected fraud.

- Compliance and Regulatory Risks: Artificial intelligence mistakes, especially those stemming from AI bias or data inaccuracies, can lead to non-compliance with industry regulations and privacy laws, resulting in hefty fines and legal repercussions.

- Erosion of Trust: Repeated AI mistakes undermine confidence in the technology, leading to user skepticism and resistance to further AI adoption within the organization. This can severely impede digital transformation efforts.

Effective management of Agentic Intelligence Errors is therefore not just a technical challenge but a strategic business imperative.

Strategies for Mitigating Agentic Intelligence Errors

Mitigating Agentic Intelligence Errors requires a multi-faceted and continuous approach, integrating technical solutions with robust governance and human oversight.

- Comprehensive Data Governance: Implement strict data quality control, ensuring training data is accurate, relevant, diverse, and as free as possible from AI bias. Regularly audit data sources and pipelines.

- Bias Detection and Mitigation Tools: Employ specialized tools and methodologies to identify and reduce AI bias within algorithms and datasets. This involves techniques like fairness metrics, re-weighting biased data, and algorithmic debiasing.

- Explainable AI (XAI): Prioritize AI models that offer transparency, allowing humans to understand the reasoning behind AI decisions. This helps in diagnosing the root cause of an AI mistake and building trust.

- Human-in-the-Loop (HITL) Systems: Design AI systems that incorporate human oversight and intervention, especially for critical decisions or complex exceptions. Humans can review, validate, and correct Agentic Intelligence Errors, and provide feedback for AI learning.

- Robust Testing and Validation: Implement continuous testing frameworks that go beyond unit tests to include adversarial testing (stress-testing AI with deliberately misleading inputs) and real-world scenario simulations to expose potential artificial intelligence mistakes.

- Real-time Monitoring and Feedback Loops: Continuously monitor AI system performance in production. Establish mechanisms for immediate feedback when an AI mistake occurs, allowing for rapid corrections and retraining.

- Dynamic Knowledge Base Updates: For AI systems that rely on knowledge bases, ensure they are regularly updated with the latest information to prevent AI mistakes due to outdated data.

- Fallback Responses: For conversational AIs, implement graceful fallback mechanisms (e.g., escalating to a human agent) when the AI cannot confidently interpret intent or resolve a query, preventing Generative AI failures from frustrating users.
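The human-in-the-loop and fallback strategies above often reduce to a confidence gate: act automatically only when the model is confident, and otherwise pause, hand the case to a person, and keep their answer as feedback. A minimal sketch, assuming the model reports a calibrated confidence score between 0 and 1 (many models do not, or report poorly calibrated scores). `Prediction`, `ConfidenceGate`, and the 0.85 threshold are hypothetical names and values chosen for illustration.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # assumed calibrated probability in [0, 1]


@dataclass
class ConfidenceGate:
    threshold: float = 0.85  # illustrative; tune against review capacity
    review_queue: deque = field(default_factory=deque)
    corrections: list = field(default_factory=list)

    def route(self, pred: Prediction) -> str:
        """Apply confident predictions automatically; pause and escalate the rest."""
        if pred.confidence >= self.threshold:
            return f"auto:{pred.label}"
        self.review_queue.append(pred)  # flagged for a human expert
        return "escalated"

    def resolve(self, correct_label: str) -> Prediction:
        """A reviewer settles the oldest escalated item; the decision is logged as feedback."""
        pred = self.review_queue.popleft()
        # (item, model guess, human answer) triples can later feed evaluation or retraining.
        self.corrections.append((pred.item_id, pred.label, correct_label))
        return Prediction(pred.item_id, correct_label, 1.0)


gate = ConfidenceGate()
print(gate.route(Prediction("t1", "refund_request", 0.97)))    # auto:refund_request
print(gate.route(Prediction("t2", "billing_question", 0.55)))  # escalated
gate.resolve("cancellation")
print(gate.corrections)  # [('t2', 'billing_question', 'cancellation')]
```

Raising the threshold sends more cases to people and lets fewer mistakes through automatically; lowering it does the reverse, so the value is a business decision as much as a technical one.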

These strategies collectively contribute to building more reliable and trustworthy AI systems.
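For the real-time monitoring and feedback loops described above, one simple starting point is a rolling error rate over recent outputs, compared against an alert level. A minimal sketch, assuming each output is eventually marked correct or incorrect (for example by a reviewer or by user feedback); `ErrorRateMonitor`, the window size, and the alert level are hypothetical choices.

```python
from collections import deque


class ErrorRateMonitor:
    """Rolling error rate over the most recent `window` outcomes."""

    def __init__(self, window: int = 100, alert_rate: float = 0.05):
        self.outcomes = deque(maxlen=window)  # True means the output was judged an error
        self.alert_rate = alert_rate

    def error_rate(self) -> float:
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 0.0

    def record(self, was_error: bool) -> bool:
        """Log one outcome; return True when the rolling error rate exceeds the alert level."""
        self.outcomes.append(was_error)  # the oldest outcome drops out once the window is full
        return self.error_rate() > self.alert_rate


monitor = ErrorRateMonitor(window=10, alert_rate=0.2)
for _ in range(8):
    monitor.record(False)
print(monitor.record(True))  # False (1 error in 9)
print(monitor.record(True))  # False (2 in 10 = 0.2, not above the alert level)
print(monitor.record(True))  # True  (oldest success drops out: 3 in 10)
```

A production version would also wait for a minimum number of samples before alerting, since a single early error in a nearly empty window would otherwise read as a 100% error rate.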

Trustworthy, Hallucination-Free AI Automation with Kognitos

While managing Agentic Intelligence Errors is a complex endeavor, Kognitos is a safe AI automation platform uniquely positioned to deliver trustworthy and hallucination-free AI automation solutions for large enterprises. It takes a fundamentally different approach from traditional Robotic Process Automation (RPA), which is rigid and prone to failure when exceptions arise, and from generic AI platforms, which often struggle with accuracy and interpretability.

Kognitos minimizes Agentic Intelligence Errors and prevents Generative AI failures by:

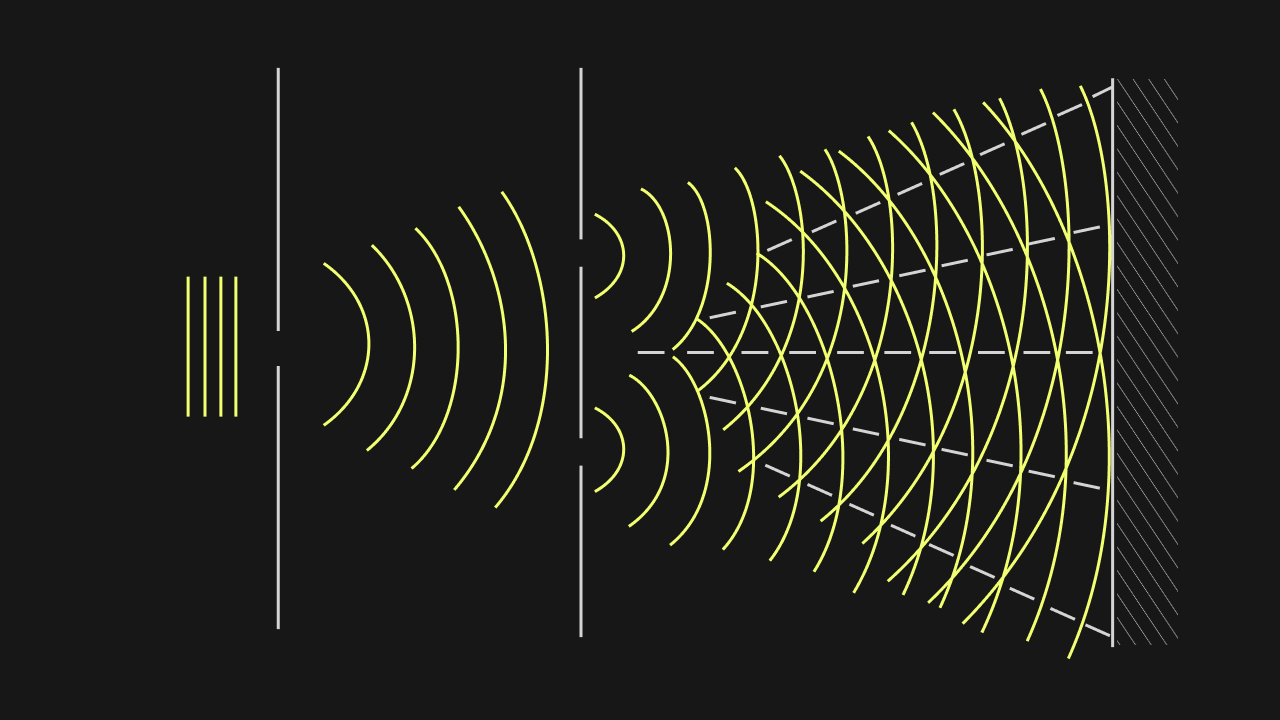

- Neuro-Symbolic AI Approach: Kognitos combines the power of large language models (LLMs) with symbolic reasoning. This hybrid approach allows Kognitos to leverage the contextual understanding of LLMs while ensuring factual accuracy and logical consistency through symbolic rules, fundamentally reducing the likelihood of an AI mistake or hallucination.

- Natural Language-Driven Precision: Business users define processes in plain English. Kognitos’s AI reasoning engine interprets this intent with precision, translating it into executable automation without misinterpretation. This direct human-to-AI communication reduces the chance of an AI mistake introduced by complex coding or abstract modeling.

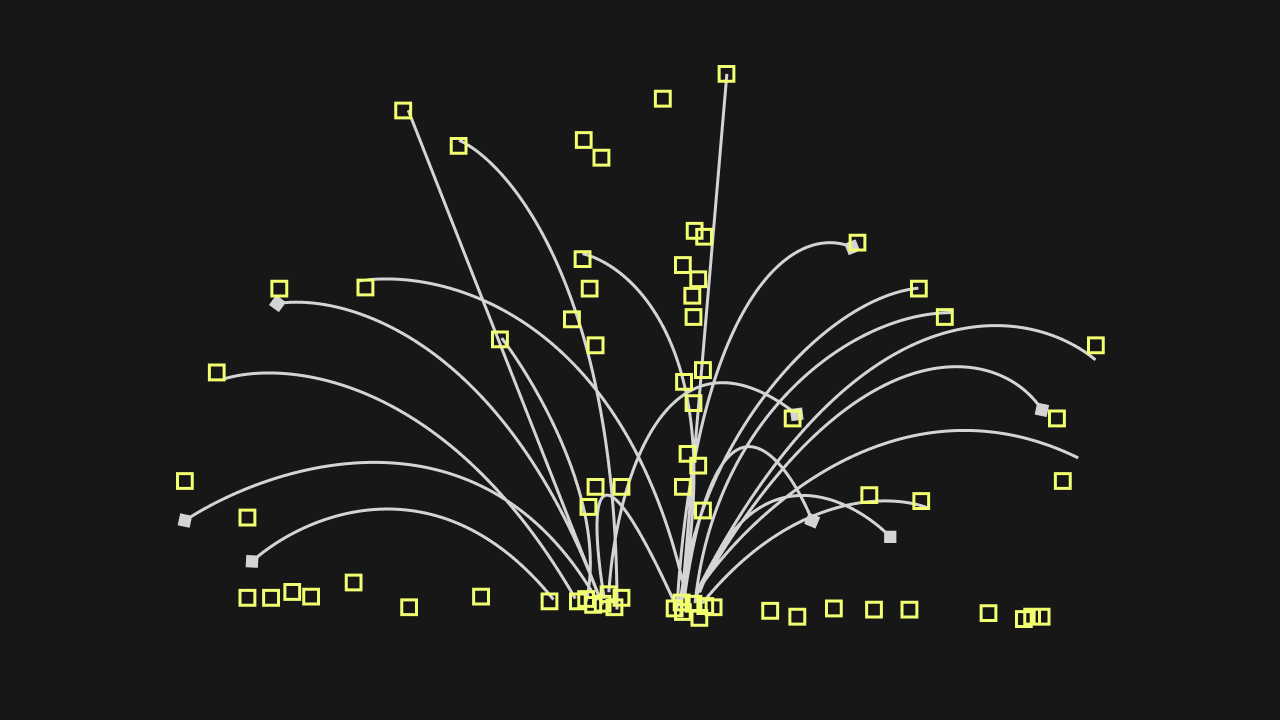

- Patented Exception Handling: Kognitos is built to manage the unexpected. Its unique exception handling capabilities allow its AI agents to intelligently detect, diagnose, and resolve unforeseen Agentic Intelligence Errors or deviations in real-time. If human judgment is needed, it seamlessly integrates human-in-the-loop for oversight, ensuring control and minimizing artificial intelligence mistakes.

- Built-in Data Validation and Governance: Kognitos focuses on ensuring the integrity of data it processes. Its AI capabilities include robust data validation, reducing AI mistakes stemming from inaccurate or inconsistent inputs.

- Enterprise-Grade Reliability: Kognitos is engineered for the rigor and compliance demands of large organizations. Its commitment to controllable and hallucination-free AI ensures that the automation is reliable and trustworthy, even for sensitive financial or operational processes.

By providing truly intelligent, adaptive, and reliable automation, Kognitos empowers enterprises to overcome the challenges of managing Agentic Intelligence Errors, driving unparalleled efficiency and trust in their AI initiatives.

The Future of Trustworthy Automation

The future of enterprise automation hinges on the ability to effectively manage Agentic Intelligence Errors. As AI systems become more autonomous and integrate deeper into core business functions, the focus will shift from merely deploying AI to deploying trustworthy AI. The continuous development of advanced AI architectures, coupled with robust governance frameworks and platforms like Kognitos that prioritize accuracy and reliability, will define this future.

Organizations that proactively invest in solutions designed to minimize AI mistakes and prevent Generative AI failures will gain a significant competitive advantage. They will leverage AI not just for efficiency, but as a reliable partner that consistently delivers accurate outcomes, fostering greater confidence and unlocking the full transformative potential of intelligent automation.

Discover the Power of Kognitos

Our clients achieved:

- 97% reduction in manual labor cost

- 10x faster speed to value

- 99% reduction in human error

Frequently Asked Questions

Can AI make mistakes?

Yes, AI can certainly make mistakes. Despite their advanced capabilities, AI systems are not infallible. An AI mistake can arise from various sources, such as flaws in their training data, biases within algorithms, misinterpretations of complex inputs, or unexpected real-world scenarios. Addressing these Agentic Intelligence Errors is crucial for reliable AI deployment.

How often does AI make mistakes?

How often an AI mistake occurs varies widely, depending on the system's complexity, the quality of its training data, its specific task, and the environment in which it operates. Simple, rule-based AI might rarely err within its narrow scope, while advanced AI systems, especially those handling nuanced, real-world data, may exhibit Agentic Intelligence Errors more frequently if they are not carefully managed and continually refined.

What are some examples of AI mistakes?

Examples of AI mistakes include a chatbot misinterpreting a user's intent and giving irrelevant responses, facial recognition systems exhibiting bias against certain demographics, autonomous vehicles misidentifying objects in complex weather, or Generative AI producing ‘hallucinations’: factually incorrect but confidently presented information. Such Agentic Intelligence Errors highlight the need for robust oversight.

What is AI bias?

AI bias refers to systematic and repeatable errors in an AI system’s output that create unfair or discriminatory outcomes. This often originates from biases present in the data used to train the AI (e.g., historical societal biases reflected in datasets) or from flawed algorithmic design. Addressing AI bias is critical for ensuring ethical and equitable deployment of AI, as it is a common cause of artificial intelligence mistakes.
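Bias of this kind can be measured and, to a degree, corrected in the training data. The sketch below computes demographic parity difference, the gap in positive-outcome rates between groups (one of several fairness metrics, which can conflict with one another), and applies reweighing, a standard pre-processing technique that weights each example by P(group) · P(label) / P(group, label) so that group and label become statistically independent in the weighted data. The function names are illustrative, and the eight-row dataset is invented purely for demonstration.

```python
from collections import Counter


def positive_rate(groups, outcomes, group, weights=None):
    """Weighted share of positive outcomes (1s) within one group."""
    weights = weights or [1.0] * len(outcomes)
    pos = sum(w for g, y, w in zip(groups, outcomes, weights) if g == group and y == 1)
    total = sum(w for g, w in zip(groups, weights) if g == group)
    return pos / total


def demographic_parity_difference(groups, outcomes, weights=None):
    """Largest gap in positive-outcome rate between any two groups (0 means parity)."""
    rates = [positive_rate(groups, outcomes, g, weights) for g in set(groups)]
    return max(rates) - min(rates)


def reweighing_weights(groups, labels):
    """Per-example weight P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] / n) * (label_counts[y] / n) / (joint_counts[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]


# Toy historical lending data: group A was approved (1) far more often than group B.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

print(demographic_parity_difference(groups, labels))  # 0.5 (0.75 vs 0.25)
weights = reweighing_weights(groups, labels)
print(round(demographic_parity_difference(groups, labels, weights), 6))  # 0.0
```

The resulting weights can be passed to any training routine that accepts per-example weights, such as the `sample_weight` argument many scikit-learn estimators accept in `fit`. Reweighing only addresses bias visible in the labels; it does not remove bias carried by features that act as proxies for group membership.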

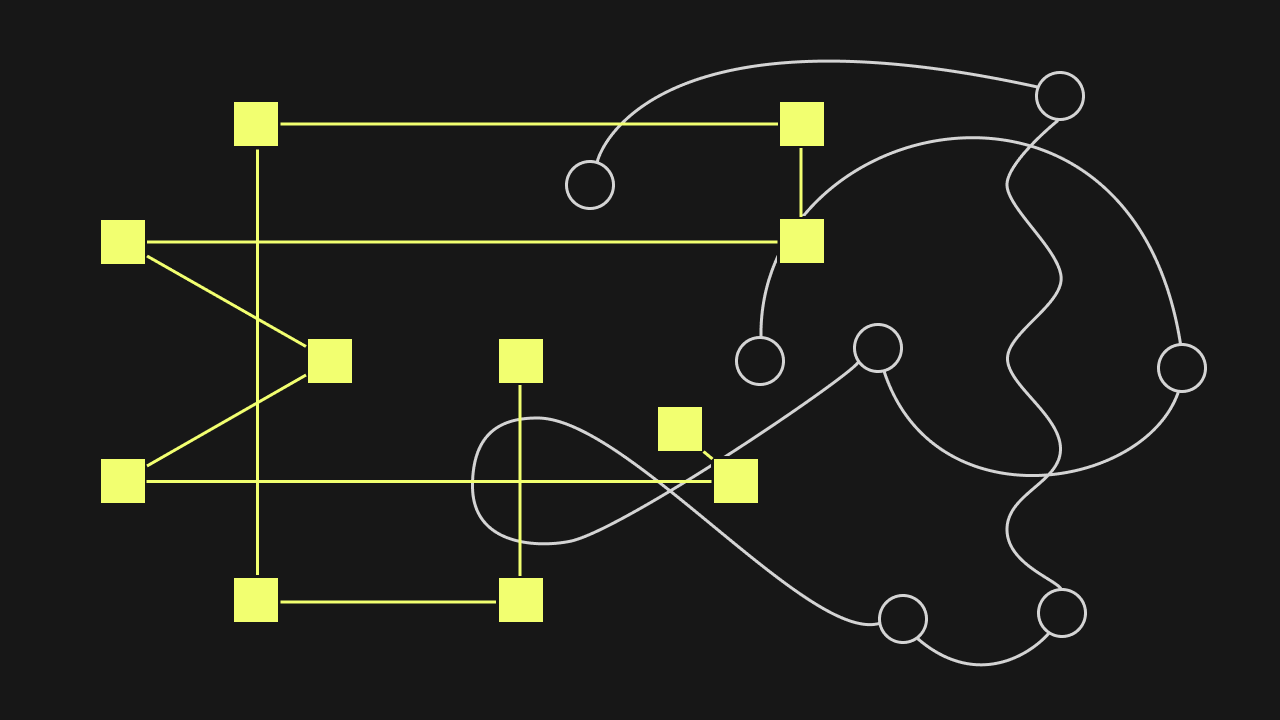

What are the three types of problems in AI?

Broadly, problems in AI fall into three categories:

1. Data-related problems: Issues stemming from insufficient, biased, noisy, or irrelevant training data, leading to an AI mistake.

2. Algorithmic/Model problems: Flaws in the AI model’s design, training methodology, or its inability to generalize effectively to new situations.

3. Deployment/Interaction problems: Challenges arising from how AI interacts with real-world environments and human users, leading to misinterpretations or unintended consequences, often manifesting as Agentic Intelligence Errors.

Can generative AI make mistakes?

Yes, generative AI can make mistakes, often in distinctive ways. A common type of AI mistake specific to generative models is ‘hallucination,’ where the AI fabricates information that sounds plausible but is factually incorrect or nonsensical. These Generative AI failures can also include generating biased content, producing irrelevant outputs, or failing to adhere to specific constraints or safety guidelines, underscoring the need for careful oversight.

What is the failure rate of AI projects?

Estimates for AI project failure rates vary widely, but many industry reports suggest a significant percentage, with figures often ranging from 50% to over 80%. These failures can stem from various sources, including poor data quality, unrealistic expectations, lack of proper governance, difficulty scaling, and the inability to effectively manage and learn from Agentic Intelligence Errors or other artificial intelligence mistakes during development and deployment.