Key Takeaways

This article provides a new blueprint for AI compliance, arguing that “bolted-on” governance is a failing strategy.

- The story: It explains that new regulations (like the EU AI Act) and the risks of “black box” AI (which is non-auditable and can “hallucinate”) make traditional AI unsafe for regulated processes. It presents a new model where compliance is built into the AI’s core.

- The business impact: This “compliant by design” approach allows leaders to automate high-risk processes like SOX and AML. It delivers a 100% auditable, deterministic, and transparent system, solving the core risks that block AI adoption and innovation.

- The key contrast: It showcases the difference between high-risk, opaque generative AI and a “compliant by design” platform. Kognitos’s “English as Code” and “neurosymbolic” architecture provide a 100% transparent, “hallucination-free,” and auditable system, as opposed to a “black box” that is fundamentally ungovernable.

The central challenge with enterprise AI is that businesses need to innovate to stay competitive, while also facing considerable risks in doing so. Leaders are in a tough spot. They are rightfully concerned about the black box problem, regulatory penalties from new regulations on artificial intelligence, and the catastrophic risk of AI hallucinations in critical business processes.

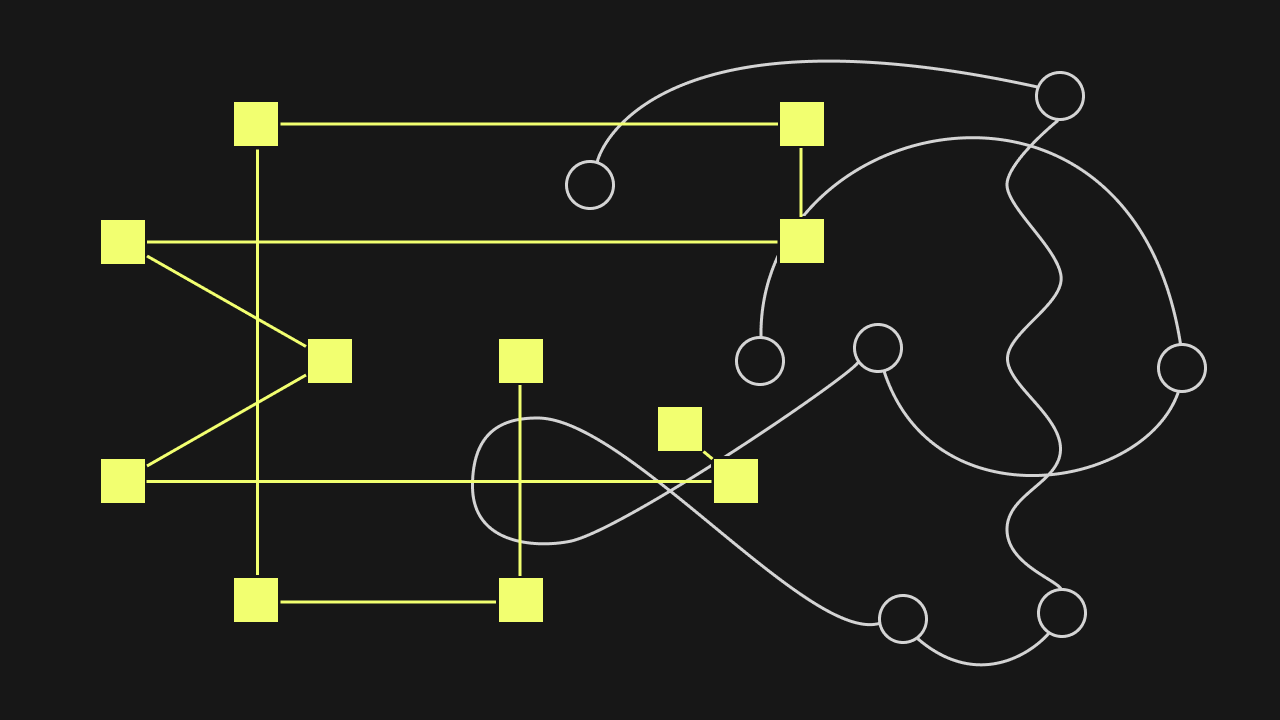

Historically, the industry’s answer has been to bolt on governance: to layer review tools, checks, and manual oversight on top after an opaque AI process runs. This is a strategy for failure. It’s reactive, expensive, and fundamentally unsafe.

This article redefines the playbook for AI compliance. We will argue that governance isn’t a feature you add later; it must be built into the core of the AI itself. A new class of intelligent automation, managed in natural language by your own compliance and operations experts, provides the solution. This English as Code approach delivers an immutable, human-readable audit trail for every action, creating a system that is transparent, deterministic, and governable by its very design. This is your AI compliance guide to building an autonomous and provably compliant operational core.

The New Regulatory Landscape

You cannot build a strategy for AI in compliance without first understanding the new regulatory minefield. Around the world, governments are moving quickly to manage the risks of AI. While specific regulations on artificial intelligence differ, they share a common DNA.

1. The Global Gold Standard: The EU Artificial Intelligence Act

The EU Artificial Intelligence Act is the most comprehensive framework to date. It establishes a risk-based approach: the higher the risk of the AI system, the stricter the rules.

- High-Risk Systems: This category includes AI used in critical infrastructure, credit scoring, hiring, and legal interpretation. These systems (which cover many back-office operations) will face mandatory requirements for:

- Transparency: Users must know they are interacting with an AI.

- Human Oversight: Robust human-in-the-loop controls must be in place.

- Data Governance: Strict rules on data quality and management.

- Auditability: The ability to provide a full log of the AI’s decision-making process.

The penalties for non-compliance are severe, reaching up to 7% of global annual turnover for the most serious violations. This has set a high bar for all global AI compliance standards.

2. The U.S. Approach: A Patchwork of Principles and Rules

In the United States, the landscape is a mix of federal guidelines and emerging state-level laws.

- U.S. AI principles have been outlined in the Blueprint for an AI Bill of Rights, which stresses that automated systems should be safe, effective, and transparent.

- U.S. AI legislation is being introduced at both the state and federal levels. New York City, for example, has already implemented rules (Local Law 144) requiring bias audits for AI-powered hiring tools. This patchwork of new regulations on artificial intelligence makes it clear that a single, governable approach is the only sustainable path forward.

All these new regulations on artificial intelligence boil down to a few key demands: transparency, auditability, and human accountability. This is a direct challenge to the black box model of AI.

The Black Box Problem

Generative AI tools are often, by their nature, non-compliant. They fail on two fundamental levels.

1. The Black Box and Auditability

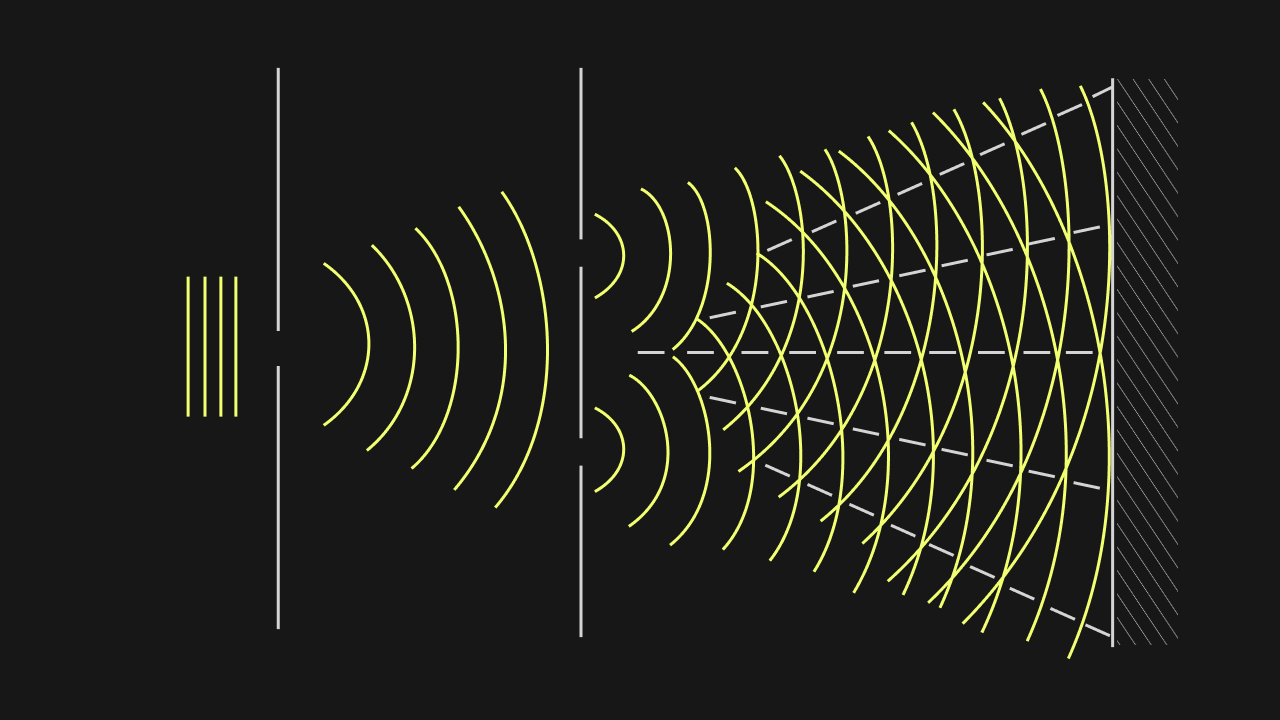

A black box AI is a system where even its creators cannot definitively explain why it produced a specific output. It’s a complex web of probabilities. You cannot ask a generative AI “What rule did you follow to approve this journal entry?” It can’t tell you.

- The Compliance Risk: This is an auditor’s nightmare. How do you prove to a regulator that your AI-powered SOX control is working correctly? How do you defend an AI-driven compliance decision in court? You can’t. This lack of traceability makes most generative AI a non-starter for many compliance processes.

2. Hallucinations and Determinism

Generative AI is designed to be creative, which means it can “hallucinate” or invent information. This is a feature for marketing copy, but a catastrophic bug for a financial process.

- The Compliance Risk: An AI that “invents” a number in a financial report, “imagines” a compliance check that never happened, or “hallucinates” a reason for flagging a transaction is an unacceptable liability. AI compliance standards require determinism: the guarantee that the same input will produce the same, correct output every time.
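To make the idea of determinism concrete, here is a minimal sketch in Python. It is not taken from any vendor's product: the `Transaction` type, the threshold, and the placeholder country codes are all illustrative assumptions. The point is only that a rule written as explicit logic returns the same decision, with the same stated reason, on every run.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transaction:
    amount: float
    country: str


# Hypothetical values chosen for illustration only.
REVIEW_THRESHOLD = 10_000
HIGH_RISK_COUNTRIES = frozenset({"XX", "YY"})


def flag_transaction(tx: Transaction) -> tuple[bool, str]:
    """Return (flagged, reason) using explicit rules only, with no randomness."""
    if tx.amount > REVIEW_THRESHOLD:
        return True, f"amount {tx.amount} exceeds {REVIEW_THRESHOLD}"
    if tx.country in HIGH_RISK_COUNTRIES:
        return True, f"country {tx.country} is on the high-risk list"
    return False, "no rule matched"


def is_repeatable(tx: Transaction, runs: int = 100) -> bool:
    """Run the rule many times and confirm every run gave the identical result."""
    results = {flag_transaction(tx) for _ in range(runs)}
    return len(results) == 1
```

Because every decision carries its reason, the question “What rule did you follow?” always has an answer.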

AI Compliance by Design with Kognitos

The only way to solve this is to stop using bolted-on fixes and instead use an AI platform that is compliant by its very design. This is the new frontier for AI in compliance and the core philosophy behind Kognitos.

Kognitos is built to be transparent, auditable, and deterministic from the ground up. It achieves this through a unique combination of technologies designed for high-stakes, regulated industries.

1. English as Code Solves the Black Box Problem

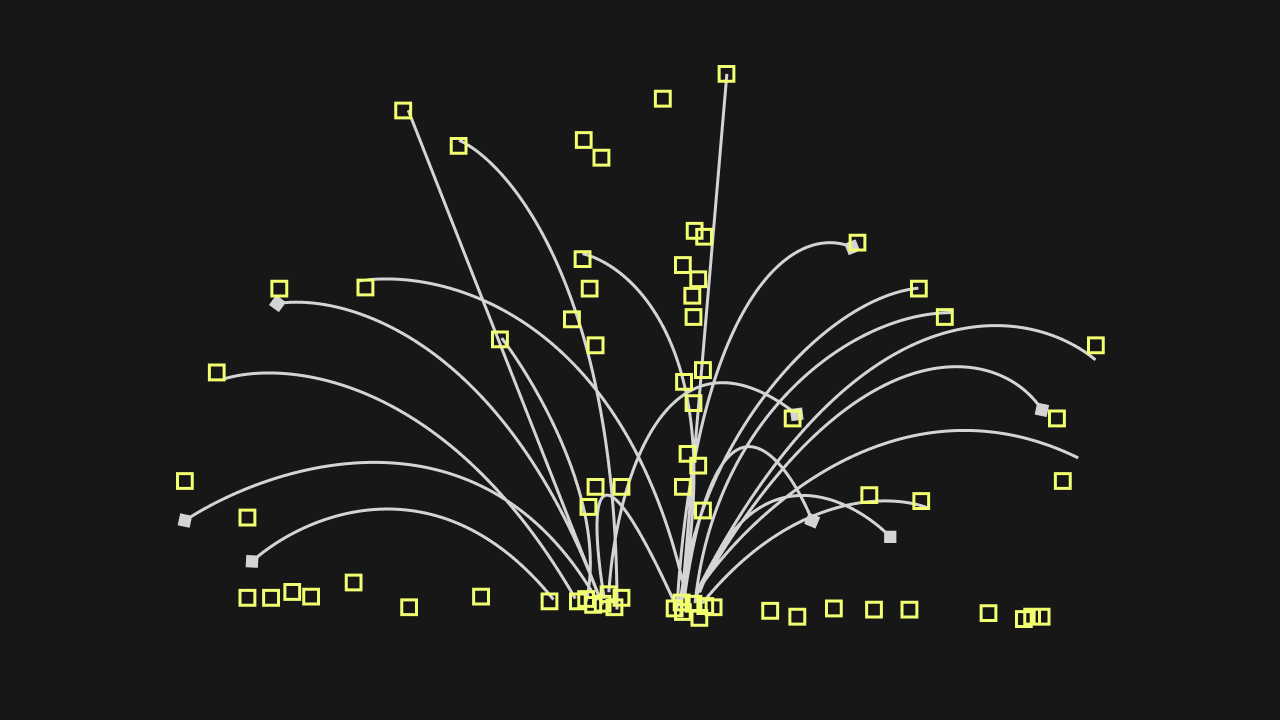

The biggest challenge for AI in compliance is auditability. Kognitos solves this with English as Code. Instead of developers writing complex code, your business and compliance experts define their own automation in plain, natural language.

- How it Works: A knowledgeable user describes the process, and that description is then programmed using English as Code.

- Example: “For SOX compliance, review all journal entries over $100,000. Verify that each entry has an attached supporting document. If a document is missing, escalate the entry to the ‘Finance Controller’ for review.”

- The Compliance Impact: The English description is the automation. This creates a perfect, human-readable, and immutable audit trail for every action. An auditor can read the process in English and see the exact logic that was executed. The “black box” is eliminated. This directly satisfies the transparency mandates of the EU Artificial Intelligence Act.
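As a rough illustration of what “the exact logic that was executed” can look like in a log, the SOX example above can be sketched as ordinary Python. This is not Kognitos's implementation. `JournalEntry`, `AuditLog`, and `review_entries` are hypothetical names, and the hash chain shown here makes the log tamper-evident rather than truly immutable, which would also need write-once storage.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass(frozen=True)
class JournalEntry:
    entry_id: str
    amount: float
    has_supporting_document: bool


@dataclass
class AuditLog:
    """Append-only log in which each record stores the hash of the previous one."""
    records: list = field(default_factory=list)

    @staticmethod
    def _digest(body: dict) -> str:
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def append(self, step: str, entry_id: str, outcome: str) -> None:
        prev = self.records[-1]["hash"] if self.records else ""
        body = {"step": step, "entry_id": entry_id, "outcome": outcome, "prev": prev}
        self.records.append({**body, "hash": self._digest(body)})

    def verify(self) -> bool:
        """Recompute the chain; any edited record breaks it."""
        prev = ""
        for rec in self.records:
            body = {k: rec[k] for k in ("step", "entry_id", "outcome", "prev")}
            if rec["prev"] != prev or rec["hash"] != self._digest(body):
                return False
            prev = rec["hash"]
        return True


THRESHOLD = 100_000               # "journal entries over $100,000"
ESCALATE_TO = "Finance Controller"


def review_entries(entries, log: AuditLog) -> list:
    """Apply the plain-English rule and log each step in its own words."""
    escalated = []
    for e in entries:
        if e.amount <= THRESHOLD:
            continue  # the rule only covers entries over the threshold
        if e.has_supporting_document:
            log.append("Verify that each entry has an attached supporting document",
                       e.entry_id, "verified")
        else:
            log.append("If a document is missing, escalate the entry",
                       e.entry_id, f"escalated to {ESCALATE_TO}")
            escalated.append(e.entry_id)
    return escalated
```

Each log record quotes the English step it came from, so a reviewer can match what ran against what was written.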

2. Neurosymbolic AI Solves for Hallucinations

To be used for AI in compliance, a system must be 100% reliable. Kognitos is built on a neurosymbolic architecture, which is a key differentiator from other AI compliance companies.

- How it Works: It combines the language understanding of modern AI (the neuro part) with the deterministic, logical reasoning of classical AI (the symbolic part).

- The Compliance Impact: This makes Kognitos’s automations hallucination-free by design. It cannot guess or invent data. It is grounded in the English-language rules you provide and follows them with 100% precision. This deterministic behavior is the only acceptable standard for any high-risk AI in compliance workflow. Like traditional programming, it can run countless times without a single mistake, error, or inaccurate guess.

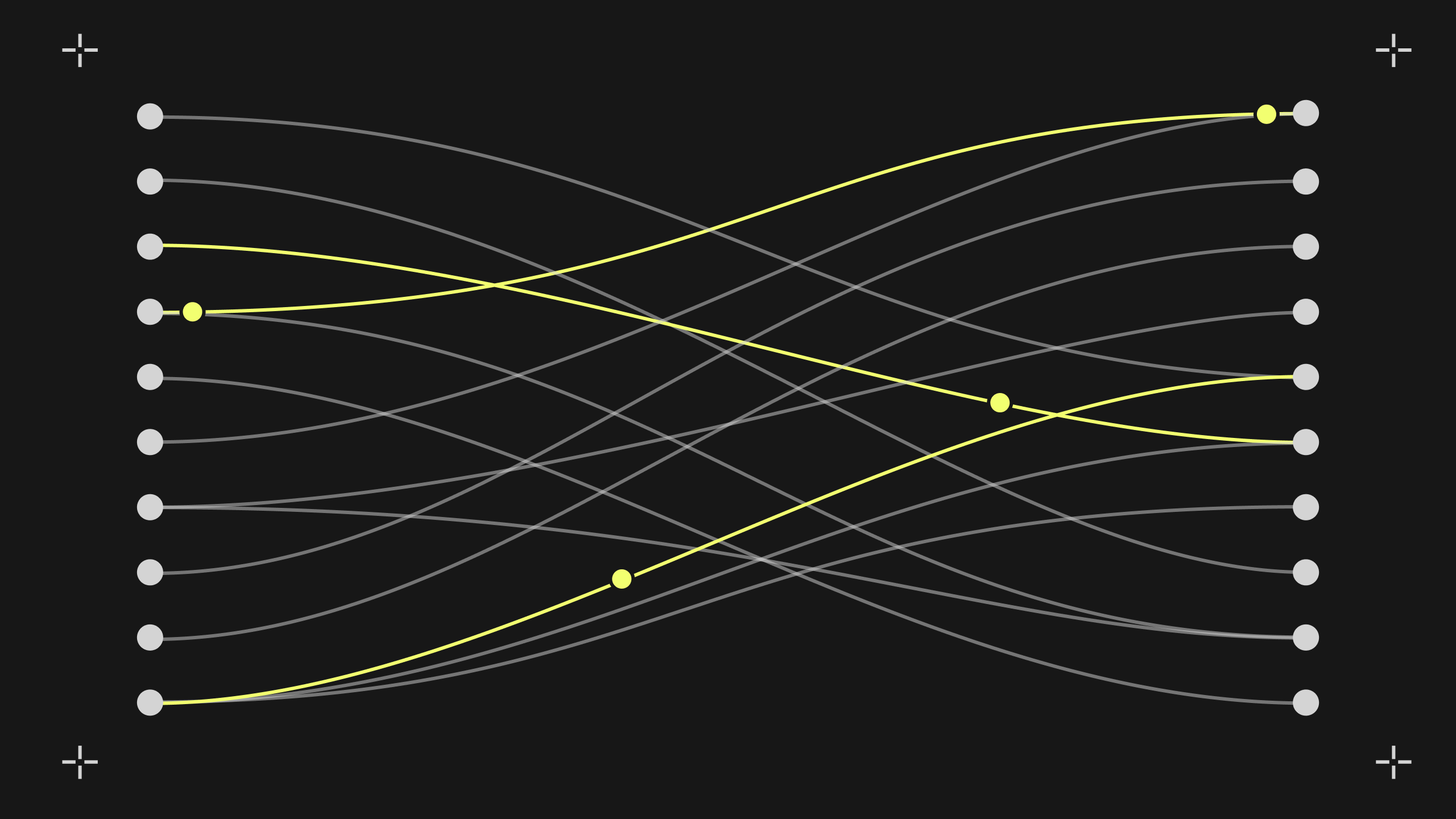

3. Built-in Exception Handling Solves for Human Oversight

Nearly all regulations on artificial intelligence, including U.S. AI principles, mandate robust human oversight.

- How it Works: Kognitos is built for real-world exceptions. When it encounters a situation not covered by the English instructions (e.g., a new document type, a compliance flag it doesn’t recognize), it doesn’t fail or make a risky guess. It pauses and uses its Guidance Center to ask the designated human expert for instructions.

- The Compliance Impact: This is a practical, built-in governance model. It keeps humans in the loop for critical judgments, ensuring a human is always in control. This directly satisfies the “human oversight” mandate of all emerging AI compliance standards.
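The pause-and-ask pattern described above can be sketched in a few lines of Python. This is a simplified illustration, not the Guidance Center itself. `GuidanceQueue`, `process`, `resolve`, and the document types are assumed names invented for the example.

```python
from dataclasses import dataclass, field

# Hypothetical handlers standing in for the instructions the experts have written.
KNOWN_HANDLERS = {
    "invoice": lambda doc: "posted to accounts payable",
    "w9": lambda doc: "attached to vendor record",
}


@dataclass
class GuidanceQueue:
    """Holds documents that are waiting on a human decision."""
    pending: list = field(default_factory=list)

    def ask(self, doc: dict, question: str) -> None:
        self.pending.append({"doc": doc, "question": question})


def process(doc: dict, queue: GuidanceQueue) -> str:
    """Handle a document if a rule covers it; otherwise pause and ask a human."""
    handler = KNOWN_HANDLERS.get(doc["type"])
    if handler is None:
        queue.ask(doc, f"No instruction covers document type '{doc['type']}'. "
                       "How should it be handled?")
        return "paused"  # no guess is made
    return handler(doc)


def resolve(queue: GuidanceQueue, doc_type: str, handler) -> list:
    """Record the human's answer as a new rule and re-run the waiting documents."""
    KNOWN_HANDLERS[doc_type] = handler
    results, still_pending = [], []
    for item in queue.pending:
        if item["doc"]["type"] == doc_type:
            results.append(handler(item["doc"]))
        else:
            still_pending.append(item)
    queue.pending = still_pending
    return results
```

The design choice worth noting is that the unknown case returns `"paused"` instead of falling through to a default, so nothing proceeds without either a written rule or a human answer.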

Leveraging AI in Regulatory Compliance

When you have a provably compliant AI platform, you can confidently automate your most critical processes. Using AI in compliance moves from a risk to a strategic advantage.

- Automated SOX Compliance: Instead of your team spending weeks manually gathering evidence for auditors, you can build an automated SOX agent. An auditor can simply write, “Gather all new vendor setup records from Q3. Verify that each one has a corresponding, validated W-9. Flag any that do not and send the list to the Head of AP.” Kognitos performs the check and provides a perfect, auditable log.

- Intelligent Anti-Money Laundering (AML)/Know Your Customer (KYC): Instead of a black-box AI flagging transactions, you can automate the investigation process in English. “When a transaction over $10,000 is flagged, cross-reference the client’s name against the new sanctions list. Review their transaction history for the last 90 days. Summarize the findings and escalate to a Level 2 Compliance Officer.”
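For readers who want to see the AML instruction above as explicit logic, here is a minimal Python sketch. It is an illustration under stated assumptions, not a production screening routine. The `Txn` type, the `investigate` function, and the single sanctions-list entry are hypothetical, and real sanctions screening involves fuzzy name matching that this example leaves out.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass(frozen=True)
class Txn:
    client: str
    amount: float
    on: date


# Hypothetical data and settings for illustration only.
SANCTIONS_LIST = {"ACME SHELL LTD"}
THRESHOLD = 10_000      # "a transaction over $10,000"
LOOKBACK_DAYS = 90      # "transaction history for the last 90 days"
ESCALATE_TO = "Level 2 Compliance Officer"


def investigate(flagged: Txn, history: list, today: date) -> Optional[dict]:
    """Follow the written steps in order and return a summary for escalation."""
    if flagged.amount <= THRESHOLD:
        return None  # the instruction does not apply

    # Step 1: cross-reference the client's name against the sanctions list.
    sanctions_match = flagged.client.upper() in SANCTIONS_LIST

    # Step 2: review the client's transactions from the last 90 days.
    cutoff = today - timedelta(days=LOOKBACK_DAYS)
    recent = [t for t in history if t.client == flagged.client and t.on >= cutoff]

    # Step 3: summarize the findings and name who receives them.
    return {
        "client": flagged.client,
        "sanctions_match": sanctions_match,
        "recent_count": len(recent),
        "recent_total": sum(t.amount for t in recent),
        "escalate_to": ESCALATE_TO,
    }
```

Every field in the summary traces back to one sentence of the English instruction, which is what makes the result reviewable.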

This is the future of AI in compliance. It’s not just about using AI to check for compliance; it’s about building your core operational processes on an AI platform that is natively compliant.

Innovate with Confidence

The central challenge of AI adoption is solved. You do not have to choose between innovation and compliance. The new global regulations on artificial intelligence are not a barrier to automation; they are a guide to doing it correctly.

Attempting to use black box AI for regulated processes is a risk that is no longer worth taking. The new generation of AI compliance companies understands this. The future belongs to platforms that are transparent, deterministic, and governable by design. By building your autonomous core on a foundation of plain English and auditable logic, you can finally move beyond the “black box” and innovate with 100% confidence.

Discover the Power of Kognitos

Our clients achieved:

- 97% reduction in manual labor cost

- 10x faster speed to value

- 99% reduction in human error

AI compliance is the process of ensuring that an organization’s development and use of artificial intelligence systems adhere to all relevant laws, regulations on artificial intelligence, and ethical guidelines. It involves managing data governance, ensuring transparency and fairness, mitigating bias, and maintaining the ability to audit and explain an AI’s decisions to regulators.

The importance of AI compliance is twofold:

- Risk Mitigation: Non-compliance with new laws like the EU Artificial Intelligence Act can result in massive fines (up to 7% of global revenue), reputational damage, and legal action.

- Trust and Adoption: Proving that your AI systems are fair, secure, and transparent builds trust with customers, partners, and internal stakeholders, which is essential for successful adoption.

A key emerging AI compliance standard is ISO/IEC 42001. It is the first international management system standard for Artificial Intelligence. It provides a framework for organizations to manage the risks and opportunities associated with AI, helping them establish a formal AI governance structure, manage the AI lifecycle, and demonstrate a commitment to responsible and ethical AI development.

The consequences of non-compliance are severe and growing. They include:

- Massive Financial Penalties: As seen with the EU Artificial Intelligence Act, fines can be large enough to significantly impact a company’s bottom line.

- Legal Liability: Companies can be held legally responsible for discriminatory or harmful decisions made by their AI.

- Loss of Market Access: Non-compliant AI systems may be banned from certain markets (like the EU).

- Reputational Damage: A public failure in AI ethics or compliance can destroy customer trust.

The biggest challenges are the black box problem (inability to explain an AI’s decisions), the risk of AI hallucinations (inventing data), ensuring data privacy, and the complexity of new and evolving regulations on artificial intelligence. A primary challenge is that most AI tools were not built with compliance in mind.

You can use AI in compliance in two ways. First, as a tool to monitor for regulatory changes or flag non-compliant transactions. Second, and more powerfully, you can use a compliant AI platform (like Kognitos) to run your core business processes (like financial controls, claims processing, or supplier onboarding) in a way that is 100% transparent, auditable, and aligned with all U.S. AI principles and global regulations.